Learning Portal UX Design

I was asked by Microsoft to work on a short-term assignment as a UX Architect to help create a proof of concept (POC) for a learning portal.

The Opportunity

A very large financial services client with over 700 branches in the UK asked whether Microsoft would take on a short project to recommend how employee learning experiences could be improved. Learning systems were in place and individually very effective, but they were not integrated and the user experience (for learners) wasn’t optimized, so training suffered as a result.

Constraints

This case study is unusual (for me) because, from start to finish, it lasted only 10 weeks (5 sprints), or as I prefer to remember it, 4 haircuts (different people), 3 birthdays and a video… well, I thought it was unusual.

Project Team

It was agreed very early on that this would be an Agile project with 2-week sprints, so 5 in total. Sprint 1 was mostly research, and the last sprint was used for software integration, wrap-up and final delivery (including a video of the solution).

Project & Scrum Master

Technical Architect

2 X Developers

UX Architect (myself)

Tester

Intern (to observe a rapid Agile Project in action)

Client: stakeholders, subject matter experts & instructional (eLearning) Designers

Remote Working

Almost all design and development was done remotely, much of it enabled by Microsoft technologies, especially Office 365 and Microsoft Teams.

Team members were distributed across the UK and Sweden, with some in the USA (for video editing). We held early-morning daily stand-ups (with the client), had video calls several times a day (as required), shared access to all project documents and even edited documents simultaneously to save time. UX artefacts such as research findings, digitized sketches, personas, user journeys, wireframes and links to Axure prototypes (AxShare) were all shared through Microsoft Teams. Working software was often demonstrated by screen/desktop sharing.

2 days per sprint face-to-face

Sprint retrospectives and planning were usually held face-to-face over 2 days at the start of each sprint cycle (so every 2 weeks). However, because we met virtually several times each day, there were rarely any surprises when we did meet face-to-face.

UX Process and Approach

When starting any new project, I know practically nothing about the client, their industry, products or services, so I rely on tried-and-tested assumptions that help me climb the learning curve (and add value) as quickly as possible.

My starting assumptions are usually:

Initial requirements are often vague.

There are (usually) multiple solutions to a problem.

It’s often not clear what problem we are trying to fix.

We learn from our mistakes.

Most people aren’t sure what they need until they can see (and experience) it.

Design Steps

I usually apply basic design thinking steps to get me started. Although these steps may appear sequential (e.g. do step 1, then step 2), it rarely works out like this. I jump around and even (shock) skip steps altogether.

A Divergent and Convergent Approach

I try to generate lots of ideas (initially by sketching), evaluate the good ones (trash the others), then refine and repeat until I can converge on a potential solution. This design approach, known as the Double Diamond, alternates between divergent and convergent thinking.

Understand (requirements)

Research

Insights (research findings)

Brainstorm ideas (ideation)

Prototype

Test

Deliver (Outcomes)

The Double Diamond

This graphic was created by the Design Council and is licensed under CC BY 4.0.

Research

Over a 2-day period on the client’s site, representative users, subject matter experts, course designers and stakeholders, together with the Microsoft team, gathered for an initial envisioning workshop. The project scope, deadlines, approach and availability of team members were agreed. From a UX perspective, we obtained a lot of useful information and recorded it interactively on whiteboards covering all 4 walls of our meeting room.

Envisioning outputs included:

Problem statements

Early on, stakeholders identified that finding learning and being notified of mandatory learning were major issues (this was later validated during research).

Personas

At this stage, we identified proto-personas based on stakeholder assumptions, to be validated during research.

User Journeys

For each persona identified, we sketched out typical user journeys. Along the way, we identified some problem areas and alternate flows.

Ideation

With everything we learned in these early sessions, and collectively documented on the walls of the meeting room, it was now time to brainstorm ideas (we had many). Some ideas proved more popular than others, including:

What if finding learning wasn’t a problem at all?

Nothing needed to be “found”; instead, the solution would (automatically) find courses for you, in other words, solving the main problem. This idea generated a lot of discussion.

What if learning was a game?

A user earned points for training they had completed, and it was fun.

What if a learner’s experience could be easily captured and shared for the benefit of others (who haven’t taken the course) simply by talking about it into a camera?

The solution would then automatically transcribe their spoken audio into text and index it so it could be found easily.

Interviews

With the help of the Technical Architect and client colleagues, I interviewed several people from different parts of the organization in different locations over a two-week period. Most interviews were face-to-face, but sometimes, due to location and availability constraints, we conducted telephone interviews instead.

All the interviews were recorded (handwritten initially and typed up later) on questionnaire templates I created specifically for this project. I tried to ask each participant the same questions for consistency and comparison. The interviews were very informal, typically lasting 15-20 minutes.

Research outputs included:

Interview Questionnaire template & guidelines

Interview schedule

Captured interview text

Personas based on real people

Actual user journeys

Top insights:

Users can’t find the training courses easily

Users often don’t have time; they want to know what mandatory training they need

Users want to own their training so they can manage their careers better

UX Priorities

It was clear from the research that the new software should prioritize these insights, because they would form the basis of a Minimum Viable Product (MVP).

The key priorities were:

Make searching for training courses easier

Support time-poor learners by proactively notifying them of their training needs

Allow learners to manage their own training

Ideation

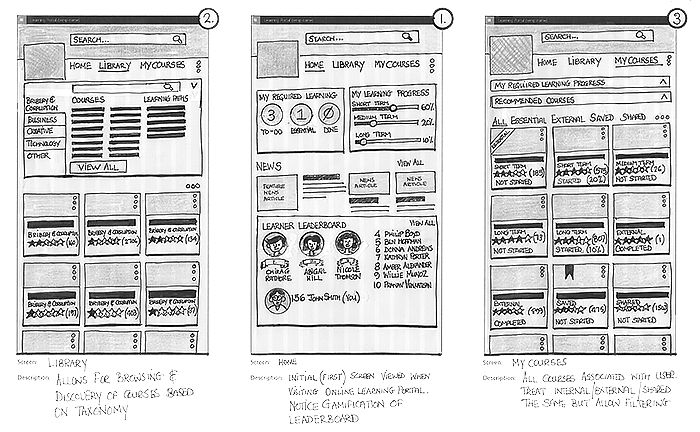

I sketch concepts, wireframes and user journeys all the time; however, on this project, in order to stay ahead of development, almost all of the early UI designs were hand-drawn as well. I didn’t have time to produce accurate wireframes.

Ideation outputs included:

Concept diagrams

Anatomy of a course & learning path

Alternate course card designs

Wireframes

Sitemaps

Logo ideas (the client wanted a new logo)

Solution

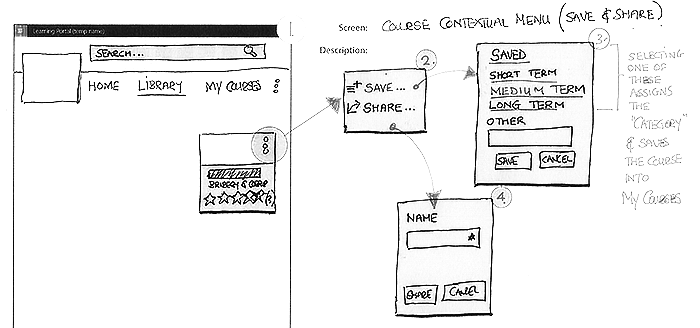

Regardless of the source of the learning materials (different learning management systems, or LMSs), courses and learning paths had the same look and feel and contained the same information. The client liked the card-based metaphor from the early sketches, and since this was a SharePoint solution, using a custom Document Card UI element made sense (and saved a lot of development time).

Improved Searching for courses

The UX design allowed users to search for courses from multiple sources on any page, integrating multiple LMSs but presenting search results consistently using the card metaphor. Any course displayed also included related courses, and functionality was added to recommend, review, share and save courses (to My Learning).
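The idea of presenting courses from several LMSs through one consistent card can be sketched as a normalisation step: each source’s records are mapped onto a single card model before search results are shown. This is a minimal Python sketch under stated assumptions, not the project’s SharePoint implementation; the two source formats, their field names, the `CourseCard` type and the `from_lms_a`/`from_lms_b`/`search` helpers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CourseCard:
    """Hypothetical common card model used for every course, whatever LMS it came from."""
    title: str
    source: str
    duration_minutes: int
    mandatory: bool


def from_lms_a(record: dict) -> CourseCard:
    # Hypothetical LMS A: stores the title as "name" and the duration in hours.
    return CourseCard(
        title=record["name"],
        source="LMS A",
        duration_minutes=int(record["hours"] * 60),
        mandatory=record.get("compulsory", False),
    )


def from_lms_b(record: dict) -> CourseCard:
    # Hypothetical LMS B: stores the title as "courseTitle" and the duration in minutes.
    return CourseCard(
        title=record["courseTitle"],
        source="LMS B",
        duration_minutes=record["minutes"],
        mandatory=record.get("isMandatory", False),
    )


def search(cards: list[CourseCard], query: str) -> list[CourseCard]:
    # Case-insensitive title match across all sources; every result has the same card shape.
    q = query.lower()
    return [card for card in cards if q in card.title.lower()]


# Illustrative records only (not client data).
lms_a_records = [{"name": "Anti-Money Laundering Basics", "hours": 1.5, "compulsory": True}]
lms_b_records = [{"courseTitle": "Money Management for Customers", "minutes": 45}]

cards = [from_lms_a(r) for r in lms_a_records] + [from_lms_b(r) for r in lms_b_records]
for card in search(cards, "money"):
    print(card.source, card.title, card.duration_minutes, card.mandatory)
```

Because the card UI only ever sees `CourseCard`, adding another LMS in this sketch means writing one more mapping function rather than changing how results are displayed.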

Just tell me what I need to do

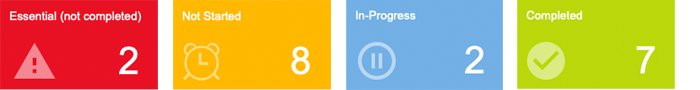

The UX POC introduced My Learning status information, where a learner could see at a glance (without having to think) whether there were outstanding courses they needed to complete. A colour-coded traffic-light scheme was introduced (although, for accessibility, colour was not the only indicator). For example, red meant something was important and needed attention soon; green meant it was completed.
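The status logic behind a traffic-light scheme like this, where colour is always paired with a text label and an icon, can be sketched as a small function. This is a minimal Python sketch, not the POC’s actual rules: the 14-day urgency window, the amber state for outstanding but not-yet-urgent courses, the label wording and the icon names are all assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumption: outstanding courses due within this window (or overdue) count as urgent.
URGENT_WINDOW = timedelta(days=14)


@dataclass(frozen=True)
class StatusIndicator:
    colour: str  # traffic-light colour
    label: str   # text label, so colour is never the only cue
    icon: str    # hypothetical icon name, a second non-colour cue


def status_for(completed: bool, due: Optional[date], today: date) -> StatusIndicator:
    if completed:
        return StatusIndicator("green", "Completed", "checkmark")
    if due is not None and due - today <= URGENT_WINDOW:
        # Overdue dates give a negative difference, so they also land here.
        return StatusIndicator("red", "Needs attention soon", "warning")
    # Assumption: amber for anything outstanding that is not yet urgent.
    return StatusIndicator("amber", "Outstanding", "clock")


today = date(2024, 1, 1)  # arbitrary example date
print(status_for(False, date(2024, 1, 10), today).label)
```

Keeping the colour, label and icon in one returned value makes it hard for a UI built on this sketch to show the colour without its accessible alternatives.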

Managing my own training

The UX POC enabled users to own their learning by providing an easy way to collect, organize and monitor progress against any course they had access to. This way, users could complete the training needed for their current job but also take additional (aspirational) training for a career move within the organization.

Testing

The UX designs were usually ahead of the production software. They included wireframes and interactive prototypes, and they evolved constantly based on continuous feedback (not just at the end of each sprint). We essentially followed a Rapid Iterative Testing and Evaluation (RITE) approach. At the same time, the production software was tested using test scripts derived from user stories, which in turn were based on the UX designs.

Outcomes

This was only a POC project, so it wasn’t possible to gather definitive test data on the outcomes of the new software (since it wasn’t fully implemented). Instead, we demonstrated the solution to everyone involved in the initial research and asked for their feedback.

Video

The client wanted to see evidence (a video) showing that the intended outcomes were achieved (or would be achieved if the POC went live). The video could also be used to justify additional funding to fully implement the solution company-wide.

Goals

The video was designed to demonstrate the software developed, explain the research and insights obtained, and record users’ reactions to the solution.

Planning

I worked with the Technical Architect to agree on an approach. There were some branding considerations, including background music and restrictions on B-roll footage (to protect privacy or sensitive information); apart from this, the style of the video was open.

I’ve been lucky enough to work on a small number of videos before, so I followed the same approach that had worked then:

Agreed the video goal.

This was easy because it had to demonstrate that the outcomes we planned for were met and, most importantly, help the client decide whether the POC solution was worth developing further.

Chose a style/direction

This wasn’t so easy; we scanned YouTube and other resources for inspiration and changed our minds a few times.

The style/direction could have been problem/solution, explanatory, feature showcase and more. However, we decided a “testimonial” style would work best with the time and resources we had. This meant interviews, soundbites and quotes from real users using or reviewing the solution, with each recording grouped around specific outcomes.

Setting the tone

We decided the tone needed to be relaxed business casual, matching the client’s workplaces.

Duration

The client felt that anything longer than 4 minutes was too long.

Script

Using the research findings and insights, I drafted a script with the help of the Technical Architect to ensure all the main UX personas were represented and the main outcomes discussed.

We used as much as we could from the users’ own recorded feedback. However, on the day of the video shoot, I think the participants tweaked almost every script. It was important that what they said was in their own words and expressed the way they normally would.

Storyboard

I created storyboards (in PowerPoint) for each participant, which were agreed upon before the day of the video shoot.

Each storyboard scene was annotated with:

Scene#

Shot#

Location

Participant

Description, including key points like particular outcomes referenced

Script

The storyboard was the default plan and was updated several times on the day of the video shoot (something I wouldn’t recommend, as it created a lot of work).

Recruited participants

This was easy enough. The list of potential participants was drawn from the users involved in the initial research or who were part of the client team. Some preferred not to be on camera and others weren’t available. However, there was a strong sense that this was the client’s solution, not one being imposed upon them, so most wanted to contribute to supporting it.

Shoot video

Logistically, this was very challenging. Coordinating participants’ availability across different locations and busy offices, updating and agreeing on the scripts, then coaching participants for recording was fairly stressful.

In addition, as much B-roll/background footage as possible needed to be captured to provide context for the speakers on camera.

Before shooting any video, if a participant hadn’t already seen the POC solution, I demonstrated it using a combination of production software, prototype screens and, in some cases, A3 screenshot print-outs.

Before recording participants’ feedback, I asked the following questions (notice these are based on outcomes, not features):

Does the POC match your expectations? Anything missing?

Would the POC make finding courses easier (or not)?

Would the POC make it easier (or not) for you to understand which courses you should complete?

Do you think the POC would help you manage your learning?

Do you think the POC is easier to use (or not) than the current solutions?

Edit video

A Microsoft team recorded the video on the days of the shoot, and it was edited remotely (in the USA) 24 hours later. Background music (selected by the client) and B-roll shots were added, and we worked through a few iterations before the client was happy to sign off.

Result

The short (4-minute) video was a huge success. It summarised the entire project by:

Introducing the problem

Explaining the current user experience

Discussing the research findings and insights obtained

Demonstrating the software (solution)

Playing back actual learners’ responses (to the solution) with their own feedback

Outlining the (potential) business outcomes and impact of the solution